Algorithms¶

Optimal segmentation¶

The most probable segmentation is the one that has the greatest (log) likelihood, or equivalently, is the lowest energy configuration of our system (the ground state). If we place the segment energies on the edges of the segmentation graph, and sum the weights along any given path from source to sink, then this becomes a straightforward case of finding the shortest (longest) path on this directed acyclic graph.

The optimal segmentation can be found in \(O(N^2)\) time by dynamic programming [Fillipova et al].

In the graphical models terminology, this algorithm goes by the name max sum. It can be further optimized (linearly bounded) if a maximum segment length is imposed.

Marginal probabilities¶

Sub-partition functions¶

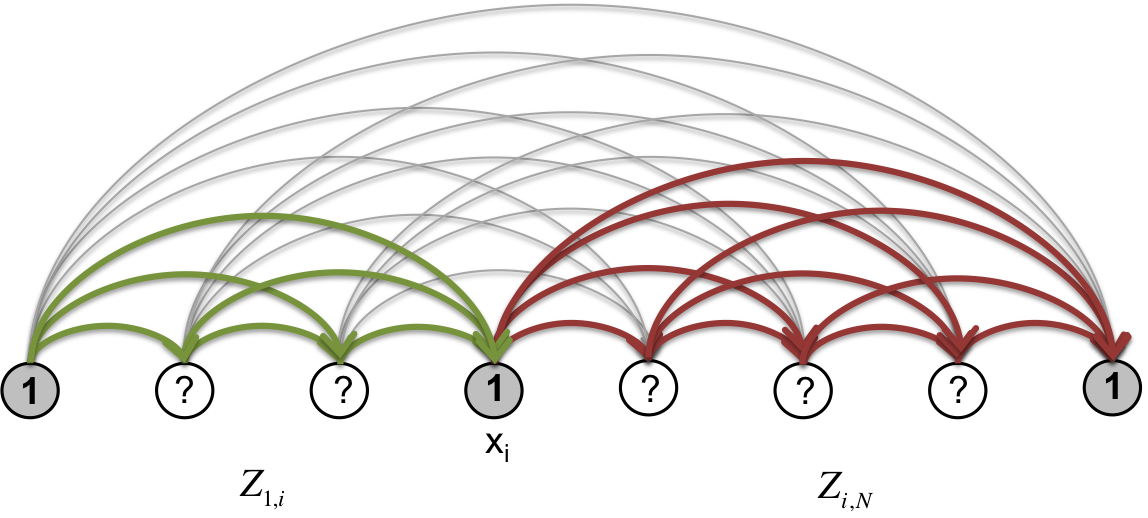

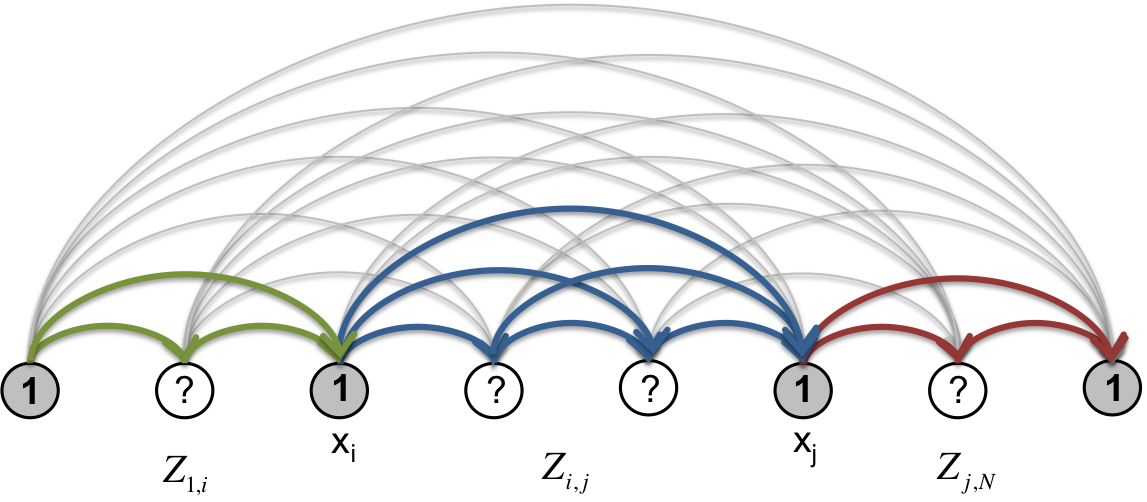

Now, if we place the segment statistical weights on the edges of the segmentation graph and take the product of weights along any given path from source to sink, then we can obtain the partition function \(Z(\beta)\) by summing over all paths starting at node \(0\) and ending at node \(N\). Furthermore, we can consider segmentation “subsystems” spanning nodes \(i\) through \(j\) (\(i < j\)) and similarly compute the sub-partition function \(Z_{i,j}\) by summing over paths in that subsystem (see Fig. 6, Fig. 7). The full system partition function \(Z\) is equivalent to \(Z_{0,N}\). We have the following recursion relations:

Forward sub-partition functions:

Backward sub-partition functions:

This forms the basis of a dynamic programming algorithm that goes by several names: sum-product, forward-backward, belief-propagation, and others.

Marginal boundary probabilities¶

With these forward and backward statistical weight sums, the marginal probability of each node being a boundary is obtained from

Fig. 6

Hence, although the number of segmentations is exponential in the number of nodes, it is possible to compute the partition function and marginal boundary probabilities in \(O(N^2)\) time.

One can go further and compute the entire set of \(Z_{ij}\) in \(O(N^3)\) and obtain co-occurrence probabilities for all pairs of nodes. The marginal probability of two nodes co-occurring as segment boundaries is given by

Fig. 7

This formula can be generalized to any number of boundaries.

Marginal segment probabilities¶

We can make use of the forward and backward sub-partition functions to efficiently compute two other quantities of interest.

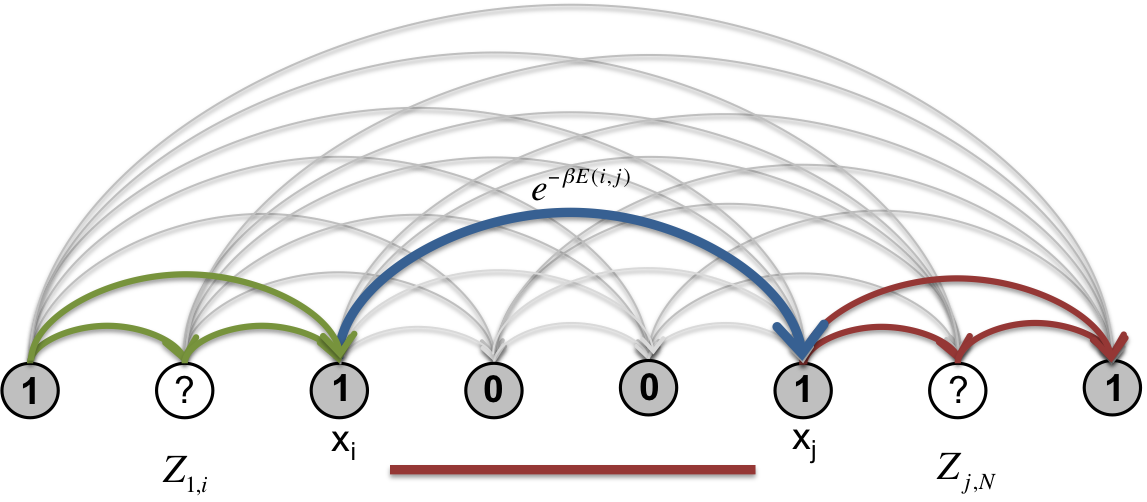

The marginal probability of the occurrence of a specific segment \([i,j)\) is given by

Fig. 8

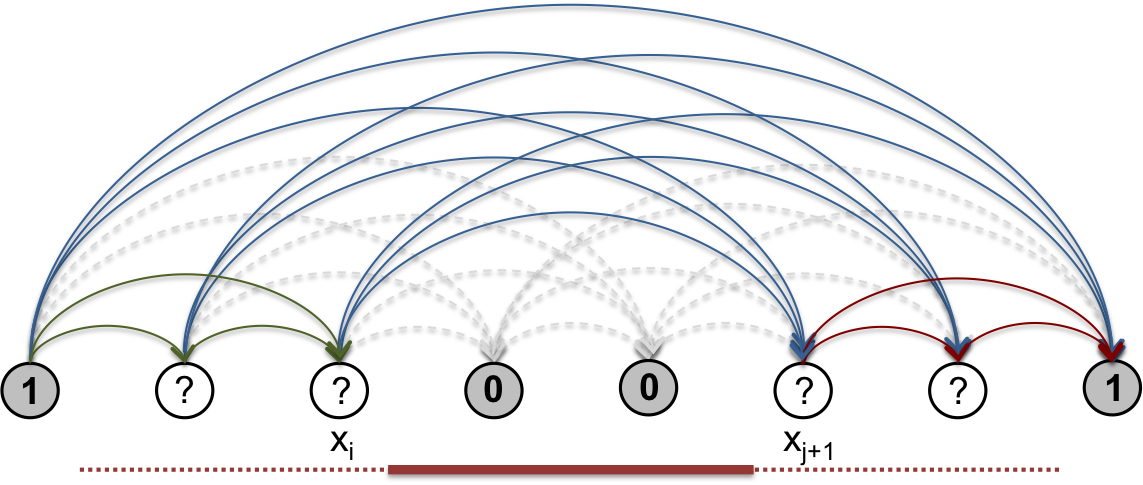

With the forward and backward statistical weights computed as in the previous section, we can further apply dynamic programming to sum up all paths such that \(i\)th and \(j\)th genomic bin co-occur in a common segment. By computing this for all pairs of bins, the resulting marginal segment co-occurrence matrix gives a representation of the ensemble that is easy to compare visually to the original Hi-C matrix. The entire procedure does not exceed \(O(N^2)\).

Fig. 9

Sampling¶

It is possible to obtain independent samples from the ensemble by performing stochastic backtracking walks on the segmentation graph. First, one must pre-compute the forward sub-partition functions. Then to generate samples one proceeds as follows:

- Start at the sink boundary node \(N\). Choose a predecessor boundary node \(k\) from the \(N-1\) available choices by sampling the discrete distribution whose probabilities are given by:

- Continue the backward random walk by sampling predecessor nodes \(k'\) until the source node \(0\) is reached.

Alternatively, one can use the backward subpartition functions and stochastically walk from \(0\) to \(N\).

Hence, samples can be generated without the need for Markov chain-based sampling methods (e.g. Metropolis sampling), which produce correlated samples.